As an integral part of Shopify's ecosystem, our mobile app serves millions of merchants around the world every single day. It allows them to run their business from anywhere and offers vital insights about store performance, analytics, orders, and more. Given its high-engagement nature, users frequently return to it, underscoring the importance of speed and efficiency.

At the beginning of 2023, we noticed that our app's performance had decreased since we started migrating to React Native. Recognizing this, we embarked on a dedicated journey to improve the app's performance by the end of the year. We’re happy to report that we have met our goals and learned a ton along the way.

In this blog post, we’re sharing how we did it and hope others use it as inspiration to make their apps faster. After all, not all fast software is great, but all great software is fast.

Defining and tracking our performance goals

Setting the right goals is vital when aiming to improve performance. A fast app is fast regardless of the technology, so these targets should not take the technology into account. We wanted Shopify App to feel as instantaneous as possible to merchants, so we aimed for our critical screens to load in under 500ms (P75) and for our app to launch within 2s (P75). This goal seemed very ambitious in the beginning because the P75 for screen loads at the time was 1400ms and app launch was ~4s.

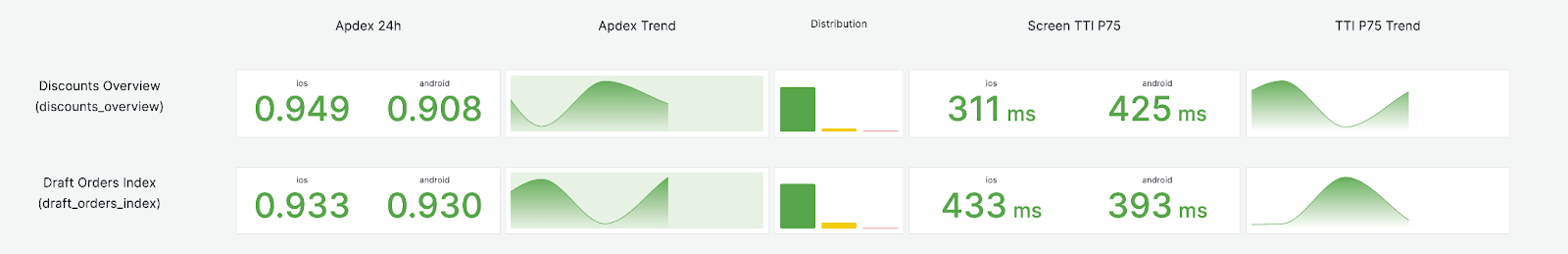

Once we defined our targets, we built real-time internal performance dashboards that support filtering by device model, OS version, and so on. These help us slice and dice the data and debug performance issues in the future. They also enabled us to validate our changes and track our progress as we worked on improving performance.
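For readers less familiar with the metric, P75 is the value that 75% of samples fall at or below. The following is a minimal sketch using the nearest-rank method. The `percentile` function and the sample load times are hypothetical, and the post does not say which percentile method the dashboards actually use.

```typescript
// Nearest-rank percentile: the smallest sample such that at least
// `p` percent of all samples are less than or equal to it.
function percentile(samples: number[], p: number): number {
  if (samples.length === 0) {
    throw new Error("percentile of an empty sample set is undefined");
  }
  if (p <= 0 || p > 100) {
    throw new Error("p must be in the range (0, 100]");
  }
  const sorted = samples.slice().sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length); // 1-based rank
  return sorted[rank - 1];
}

// Hypothetical screen load times in milliseconds (not real data).
const loadTimesMs = [320, 410, 450, 480, 520, 610, 900, 1400];
const p75 = percentile(loadTimesMs, 75); // 610
const meetsTarget = p75 < 500; // false: this sample set misses a 500ms target
```

Tracking a percentile rather than an average keeps the slowest quarter of sessions visible instead of letting fast devices hide them.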

Performance bottlenecks

If we had to group our performance issues into common themes, they would be the following:

- Doing necessary work at the wrong time

- Doing unnecessary work

- Not leveraging cache to its fullest

Doing necessary work at the wrong time

Excessive rendering during initial render

This is perhaps the most common issue that we saw across the app. On most devices, the UI is painted 60 times per second. It’s important to paint whatever is in the visible section of the screen as soon as possible and compute the rest later. This ensures that merchants see relevant content as soon as possible. A good example of this is a carousel: you may need to render at least 3 items for smooth scrolling, but you can get away with rendering only one item in the first render and deferring the next 2 until later. The first item will be visible much sooner.
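The carousel example can be sketched as a small pure function that decides how many items to draw in each phase. This is an illustration only: `RenderPhase` and `itemsToRender` are hypothetical names, and in a real React Native component the phase would typically switch after the first frame has been painted.

```typescript
type RenderPhase = "first-paint" | "after-first-paint";

// Returns the slice of items that should be rendered in the given phase.
// First paint draws only what is visible; the scroll buffer comes later.
function itemsToRender<T>(
  items: T[],
  phase: RenderPhase,
  firstPaintCount: number = 1,
  bufferedCount: number = 3
): T[] {
  const count = phase === "first-paint" ? firstPaintCount : bufferedCount;
  return items.slice(0, count);
}

// Hypothetical carousel content.
const slides = ["sales", "orders", "visitors", "conversion", "returns"];
const initial = itemsToRender(slides, "first-paint"); // ["sales"]
const buffered = itemsToRender(slides, "after-first-paint"); // ["sales", "orders", "visitors"]
```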

The following is an image of Shopify App’s Products Overview tab. The red rectangle represents the viewport; anything outside it wasn’t required on the first paint. It was necessary work, but it was done at the wrong time and so increased the screen load time unnecessarily.

We found several areas where this strategy helped, and we built tools such as LazyScrollView to support it.

LazyScrollView

One of the first things we noticed was that some of the important screens were long and rendered a lot of content outside the viewport. Even if only 50% of the content drawn was hidden, it was still a lot of extra work. To address this, we built a component called LazyScrollView, which is internally powered by FlashList. FlashList only renders what is visible during the initial render. This conversion resulted in significant benefits, reducing load times by as much as 50%.

Optimizing Home screen Load Time

We also found that our Home screen was waiting longer than necessary to show content. Multiple queries contribute to the Home screen, and we realized that we could render it much sooner if we didn’t wait for all of them to finish. In particular, we didn’t need to wait for queries whose data isn’t visible in the first paint.
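One way to express this rule is a readiness check that only considers the queries feeding the first paint. This is a hedged sketch, not the app's actual code: `QueryState`, the `aboveTheFold` flag, and the query names are all assumptions made for illustration.

```typescript
interface QueryState {
  name: string;
  aboveTheFold: boolean; // does this query feed content visible on first paint?
  loaded: boolean;
}

// The screen can render as soon as every above-the-fold query has data.
// Queries for content further down the screen are ignored here.
function canRenderScreen(queries: QueryState[]): boolean {
  return queries
    .filter((query) => query.aboveTheFold)
    .every((query) => query.loaded);
}

// Hypothetical Home screen queries.
const homeQueries: QueryState[] = [
  { name: "salesSummary", aboveTheFold: true, loaded: true },
  { name: "ordersToFulfill", aboveTheFold: true, loaded: true },
  { name: "marketingTips", aboveTheFold: false, loaded: false },
];
const readyToRender = canRenderScreen(homeQueries); // true
```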

Excessive rendering before relevant interaction

Certain UI elements are not required at all until there is an interaction that makes them visible, like scrolling. Drawing them earlier is an unnecessary use of device resources. Let’s talk about a few issues that we found in Shopify App and the solutions we deployed.

Horizontal list optimization

Some of our screens had horizontal lists of 10-20 items that were rendered using ScrollView or FlatList. As mentioned earlier, ScrollView ends up drawing all the items, while FlatList without the right config draws 10 items. On most mobile devices only 3 items were visible at a time, so all that extra drawing was just wasting resources. We switched to FlashList, which resolved these problems completely. FlashList’s ability to take item size estimates and figure out the rest is a powerful feature.

Building every screen as a list

Another major initiative was to rewrite all screens as lists, no matter how big or small they were. We wanted drawing only what is required to be the default, so we built a set of tools on top of FlashList called ListSource. It renders only what's visible and updates only the necessary components, using an API that is easier and more intuitive than our previous “cell-by-cell” components. This approach not only made the initial render super fast, but also optimized updates by automatically memoizing what’s necessary.

Setting inlineRequires to true

Setting inlineRequires to true in our metro.config.js file improved our launch time by 17%. The size of the gain from such a simple change was surprising, because inlineRequires is often overlooked since the advent of Hermes. However, we found that a lot of upfront code execution can be avoided by enabling inline requires, leading to significant performance improvements. We’re not really sure why this was turned off in our config, so we felt it was worth mentioning. Check your config today.
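The idea behind inline requires is that a module is evaluated when it is first used rather than at startup. The sketch below illustrates that deferral in plain TypeScript; it is not Metro's transform. `loadHeavyModule`, `lazy`, and `formatPrice` are hypothetical names, and the counter exists only to make the effect observable.

```typescript
let initCount = 0;

// Exposed so callers can observe how many times the module was evaluated.
function getInitCount(): number {
  return initCount;
}

// Stands in for a module with expensive top-level code.
function loadHeavyModule(): { format(amount: number): string } {
  initCount += 1;
  return { format: (amount) => `$${amount.toFixed(2)}` };
}

// Wraps a loader so it runs at most once, on first use.
function lazy<T>(load: () => T): () => T {
  let loaded = false;
  let value: T | undefined;
  return () => {
    if (!loaded) {
      value = load();
      loaded = true;
    }
    return value as T;
  };
}

// Nothing is evaluated here; startup pays no cost for the heavy module.
const getHeavyModule = lazy(loadHeavyModule);

// The module is evaluated the first time this function is called.
function formatPrice(amount: number): string {
  return getHeavyModule().format(amount);
}
```

With inline requires enabled, the bundler applies a similar deferral to `require` calls automatically, so code for screens a merchant never opens in a session does not run during launch.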

Optimizing Native Modules

We found that one of our native modules was taking a long time to initialize, which we fixed to cut down our launch time significantly. It’s always a good idea to profile native module startup time to understand if any of them are slowing down app launch.

Doing unnecessary work

This is code that isn’t necessary or that is repeated across renders for no reason. Inefficient code and unnecessary allocations also count as unnecessary overhead. Shopify App had a few instances of this.

Freezing Background Components

Our app, being a hybrid one, uses a mix of React Navigation and native navigation. We noticed that some of the screens in the back stack were getting updated for no reason when moving from one screen to another. To address this, we developed a solution to freeze anything in the background automatically. This reduced the navigation time by up to 70% for some screens.

Enhancing Restyle Library

We worked on the Restyle library, making it 5-10 percent faster. Restyle allows you to define a theme and use it throughout your app with type safety. While the performance cost of using Restyle was minimal for individual components, it had a compounding effect when used for thousands of components. The main issue with Restyle was that it was creating more objects than it needed to, so we optimized it. By accelerating Restyle, we brought its overhead atop vanilla React Native components to under 2%.
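The post does not describe the exact optimization, so the following is only one common way to avoid repeated allocations: cache the resolved style object per combination of props. This is an assumption for illustration and not Restyle's implementation; `Theme`, `BoxProps`, and `createStyleResolver` are hypothetical.

```typescript
type Theme = {
  spacing: Record<string, number>;
  colors: Record<string, string>;
};
type BoxProps = { padding?: string; backgroundColor?: string };
type Style = { padding?: number; backgroundColor?: string };

// Returns a resolver that maps theme-based props to a style object and
// reuses the same object whenever the same props are seen again.
function createStyleResolver(theme: Theme): (props: BoxProps) => Style {
  const cache = new Map<string, Style>();
  return (props) => {
    const key = `${props.padding ?? ""}|${props.backgroundColor ?? ""}`;
    const cached = cache.get(key);
    if (cached !== undefined) {
      return cached; // no new allocation for repeated props
    }
    const style: Style = {};
    if (props.padding !== undefined) {
      style.padding = theme.spacing[props.padding];
    }
    if (props.backgroundColor !== undefined) {
      style.backgroundColor = theme.colors[props.backgroundColor];
    }
    cache.set(key, style);
    return style;
  };
}

// Hypothetical theme values.
const resolveStyle = createStyleResolver({
  spacing: { s: 8, m: 16 },
  colors: { surface: "#ffffff" },
});
const first = resolveStyle({ padding: "m", backgroundColor: "surface" });
const second = resolveStyle({ padding: "m", backgroundColor: "surface" });
const reused = first === second; // true: the second call allocates nothing
```

Returning a stable object reference also helps memoized components skip re-renders, since shallow prop comparisons then succeed.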

Batched state updates by default

React Native doesn’t always batch state changes. We wrote custom code to enable state batching, which improved screen load time by 15% on average and up to 30% for some screens. By enabling state batching, we were able to significantly improve the performance of screens that were doing a lot of bridge requests to access something super small and then update their state.
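To show why batching matters, here is a toy store that notifies subscribers once per batch instead of once per update. It is a simplified model written for this example, not React's batching mechanism and not the custom code mentioned above; `BatchedStore` and its methods are hypothetical.

```typescript
type Listener<S> = (state: S) => void;

class BatchedStore<S extends object> {
  private state: S;
  private listeners: Listener<S>[] = [];
  private batchDepth = 0;
  private dirty = false;

  constructor(initialState: S) {
    this.state = initialState;
  }

  subscribe(listener: Listener<S>): void {
    this.listeners.push(listener);
  }

  setState(patch: Partial<S>): void {
    this.state = { ...this.state, ...patch };
    if (this.batchDepth > 0) {
      this.dirty = true; // defer the notification until the batch ends
    } else {
      this.notify();
    }
  }

  // Runs `work` and notifies subscribers at most once afterwards.
  batch(work: () => void): void {
    this.batchDepth += 1;
    try {
      work();
    } finally {
      this.batchDepth -= 1;
      if (this.batchDepth === 0 && this.dirty) {
        this.dirty = false;
        this.notify();
      }
    }
  }

  private notify(): void {
    for (const listener of this.listeners) {
      listener(this.state);
    }
  }
}

// Two updates inside one batch trigger a single "render".
const productStore = new BatchedStore({ title: "", price: 0 });
const renderLog: string[] = [];
productStore.subscribe((s) => renderLog.push(`${s.title}:${s.price}`));
productStore.batch(() => {
  productStore.setState({ title: "Mug" });
  productStore.setState({ price: 12 });
});
```

Without the `batch` wrapper, the subscriber would run twice, once with an intermediate state that is never useful to draw.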

Not leveraging cache to its fullest

Shopify App loads data first from cache and fetches from the network in parallel. Initial draw from cache improves perceived loading greatly and the data is still relevant to merchants who come back to the app frequently. Cached data isn’t always outdated and we need to leverage it as much as possible. We took this very seriously and started looking into how we can increase our cache hits as much as possible.
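The cache-first pattern described here can be sketched as follows. This is a minimal illustration under assumptions: an in-memory `Map` stands in for the real cache, and `loadCacheFirst`, `fetchFromNetwork`, and `onData` are hypothetical names.

```typescript
type DataSource = "cache" | "network";

// Delivers cached data right away (if any) while the network request
// runs in parallel, then delivers the fresh data and updates the cache.
function loadCacheFirst<T>(
  key: string,
  cache: Map<string, T>,
  fetchFromNetwork: (key: string) => Promise<T>,
  onData: (data: T, source: DataSource) => void
): Promise<void> {
  // Start the network request first so it is not delayed by the cache read.
  const network = fetchFromNetwork(key).then((fresh) => {
    cache.set(key, fresh);
    onData(fresh, "network");
  });

  const cached = cache.get(key);
  if (cached !== undefined) {
    onData(cached, "cache"); // first draw comes from cache
  }
  return network;
}
```

The caller renders on the first `onData` call and re-renders when the network result arrives, so a returning merchant sees content without waiting on the network.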

There’s this notion that loading from cache means showing irrelevant data, but that isn’t true for users who open the app frequently. You can always tweak how long data remains cached. If you don’t cache data, you might want to reconsider.

Tracking Cache Misses and Hits

We started tracking cache misses versus cache hits and found that only 50% of users were loading from cache first. This was less than expected for screens like Home, which should load from cache more often. After further investigation, we found an issue with our GraphQL cache, and resolving it increased cache hits by 20%.
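Tracking of this kind can be as simple as counting outcomes per screen load. The sketch below is an assumption about how such a counter might look; `CacheStats` is a hypothetical name and the recorded values are made up.

```typescript
class CacheStats {
  private hits = 0;
  private misses = 0;

  // Call once per screen load with whether the first draw came from cache.
  record(hit: boolean): void {
    if (hit) {
      this.hits += 1;
    } else {
      this.misses += 1;
    }
  }

  // Fraction of loads served from cache first, or 0 if nothing was recorded.
  hitRate(): number {
    const total = this.hits + this.misses;
    return total === 0 ? 0 : this.hits / total;
  }
}

// Hypothetical Home screen loads: three hits and one miss.
const homeStats = new CacheStats();
[true, true, false, true].forEach((hit) => homeStats.record(hit));
const homeHitRate = homeStats.hitRate(); // 0.75
```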

Pre-warming the cache

Based on these numbers, it was clear that users loading from cache have a much better app experience. We wanted more of them to get data from cache first, and we also wanted cached data to be more relevant and less outdated. For our critical screens, we found common trigger points where we could pre-warm the cache. With this strategy in place, we observed that for key screens as much as 90% of merchants now see data from cache first. This significantly lowered our P75 times because, for those loads, we completely eliminated the lag introduced by the network.

Conclusion

Our year-long journey to improve the performance of our mobile application has been challenging, enlightening, and ultimately rewarding. The app launch (P75) is 44% faster and screen load times (P75) have dropped by 59%. This is a massive win for our merchants.

This journey has confirmed that performance improvement is not a one-time task, but a continuous process that requires regular monitoring, optimization, and innovation. We've learned that every millisecond counts and that seemingly small changes add up to have a significant impact on the overall user experience.

We're proud of how fast our app is now, but we're not stopping here. We remain committed to making the app as efficient as possible, always striving to provide the best experience for our merchants.

We hope that sharing our journey will inspire others to embark on their own performance improvement initiatives, demonstrating that with dedication, creativity, and a data-driven approach, significant improvements are possible.